Everyone’s Using AI Wrong: Here’s a Better Approach

Everyone's using AI to cut corners. Stop making fake AI puppy robot ads and start using AI correctly. Here's how…

*Reposted from my Medium article, originally published in April 2025

“I just wrote a children’s book in under two minutes using AI, and here’s how you can do it too,” boasts a woman in a YouTube ad Google thought I’d like. (Google really lets people advertise anything these days, huh?) I find these ads upsetting. Just today, as I’m writing this, I saw another ad for a company supposedly selling little AI-powered puppy robots. The video was clearly just footage of real puppies mixed with Sora-generated clips of puppies, but I guess some people will believe robotics is so advanced that we can create robot pups indistinguishable from the real thing? Humanity doesn’t have the best track record when faced with new technology, and generative AI seems easy to exploit, from get-rich-quick schemes to passing AI-generated content off as human creativity. Regardless, AI is here to stay, so we need to learn to live with it. That raises the question: what’s the best way to use generative AI?

At work and across the internet, I kept hearing people say that those who learn to use AI will thrive compared to those who don’t. I never truly understood this, nor did I know how to apply the idea. Are we all expected to become “prompt engineers,” whatever that entails?

Don’t get me wrong: I actually really like artificial intelligence. As a child, I always wanted my own personal version of Jarvis, Iron Man’s AI-powered super assistant (just without it getting a body and leaving me for Elizabeth Olsen). With each major advancement in AI, I feel as though we’re getting closer and closer to that dream. But until then, what am I supposed to do with AI right now?

As someone who has always enjoyed the creative arts, I don’t love the use of AI for artistic creation. For assistance, yes, but not for creation. I often find AI images weird-looking, though they have improved a lot recently, especially around the time of the controversial AI-generated Studio Ghibli art trend. Writing is dull when AI is given free rein. Of course, if AI is trained on pre-existing data, and creativity is often the pursuit of something new, then AI is both a limited creative tool and hardly a threat to any genuine artistic pursuit (or at least that’s what I hope). Yet AI “art” seems to be how most people on the internet use the technology.

So, if creative expression isn’t the right use for AI, then what is? For me, the answer came through my work as an SEO Analyst.

How I Learned To Use AI

My relationship with generative AI began when I started working as an SEO Analyst. AI has made SEO a pain, but my own encounters with AI came in an entirely different context: I was constantly confronted with AI as a search engine. With tools like SearchGPT, Perplexity, and Google’s AI Overviews, AI has become central to how people search for information. That made my own use of AI inevitable.

AI Search

When I type a Google query, I’m often satisfied with the AI-generated answer, especially when the query isn’t important enough that I need to verify the facts deeply. For more complex queries, I turn to SearchGPT and Perplexity. For example, if I just want a basic definition, I might type “What is information gain?” (SEO stuff, please bear with me) into a standard search engine like Google. However, if I need an in-depth explanation of something very specific, I might ask SearchGPT or Perplexity a question like “What is the relationship between information gain and the Google Knowledge Graph?”

Deep Research features take automated research to a whole new level. I especially like ChatGPT’s version. With Deep Research, the AI takes much longer to work out the steps it needs to answer your question. It digs deeper into the internet, pulling specific information from many webpages and relating them to one another. A response can take around 9 minutes, depending on what you’re looking for. My longest took 11 minutes, when I asked it to find houses matching a very specific set of criteria. To my surprise, it worked (kind of)! Two of the houses were no longer available and the third was ugly, but I was surprised by how far it got.

I always advise everyone to fact-check the most important pieces of information. For most use cases, though, this is good enough to get the ball rolling on finding ideas.

AI-Powered Knowledge Base

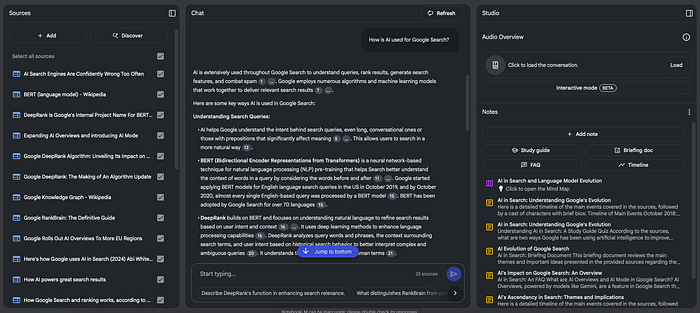

Another tool I started using was Notebook LM, which quickly became my favorite. It lets you create a notebook and upload sources into it: documents, webpages, YouTube video links, and more. The AI is then “grounded” in those sources, which greatly reduces (though doesn’t eliminate) one of AI’s biggest flaws: hallucinations. For me, that makes Notebook LM feel like a more trustworthy AI tool for the specific topics I want to learn about, so it’s my go-to for learning.

Notebook LM is more than a standard text-based chatbot, though. One of its key features, “Audio Overview,” generates a podcast-style conversation between two AI hosts discussing the sources you uploaded. You can customize what they discuss or join the conversation yourself to ask questions about specific topics in your sources.

Notebook LM makes learning much easier. I often upload sources I’ve already read but whose details I might forget, so I can revisit them and connect them to see how multiple concepts work together. My first real use for it was preparing a presentation on the role artificial intelligence plays in Google Search. I wouldn’t have learned nearly as much about such a complex topic without Notebook LM (Google’s AI, helping me learn more about Google’s AI).

ChatGPT, Perplexity, and Claude all share a feature known as “Projects” (or “Spaces” in Perplexity). Like Notebook LM, these work as folders where you can upload information and documents to build a centralized knowledge base. Despite the similarities, I believe their use cases are very different. While Notebook LM is ideal as a study tool, I find these folders more practical for getting tasks done than for pure learning.

Here’s an example of what I mean: you can create a project folder in ChatGPT and upload a PDF listing your family’s food preferences. Then you can brainstorm with SearchGPT to come up with new recipes your family may enjoy, or even share links to recipe pages and have the AI judge whether each one is a good fit. These chats are saved, so ChatGPT remembers previous interactions, which makes these folders especially useful for ongoing tasks that need to follow a consistent set of guidelines. Notebook LM could be used similarly, but its chats are erased, so there’s no context from previous conversations or ongoing work.

AI Is Here, So Let’s Use It Well

By nature, humanity never stops advancing technology, and AI is just the latest massive leap. There are understandable concerns about what AI could do to job availability for humans, and I share them. Until there’s legislation to prevent the major downsides that may come from this, the best option is to learn how to use AI so that we don’t get replaced by it. Maybe we can even use it to become more efficient in our work. I believe the most ethical way forward is to use AI tools honestly.

My current favorite use of AI is to facilitate knowledge search and knowledge management, but this is just the beginning.